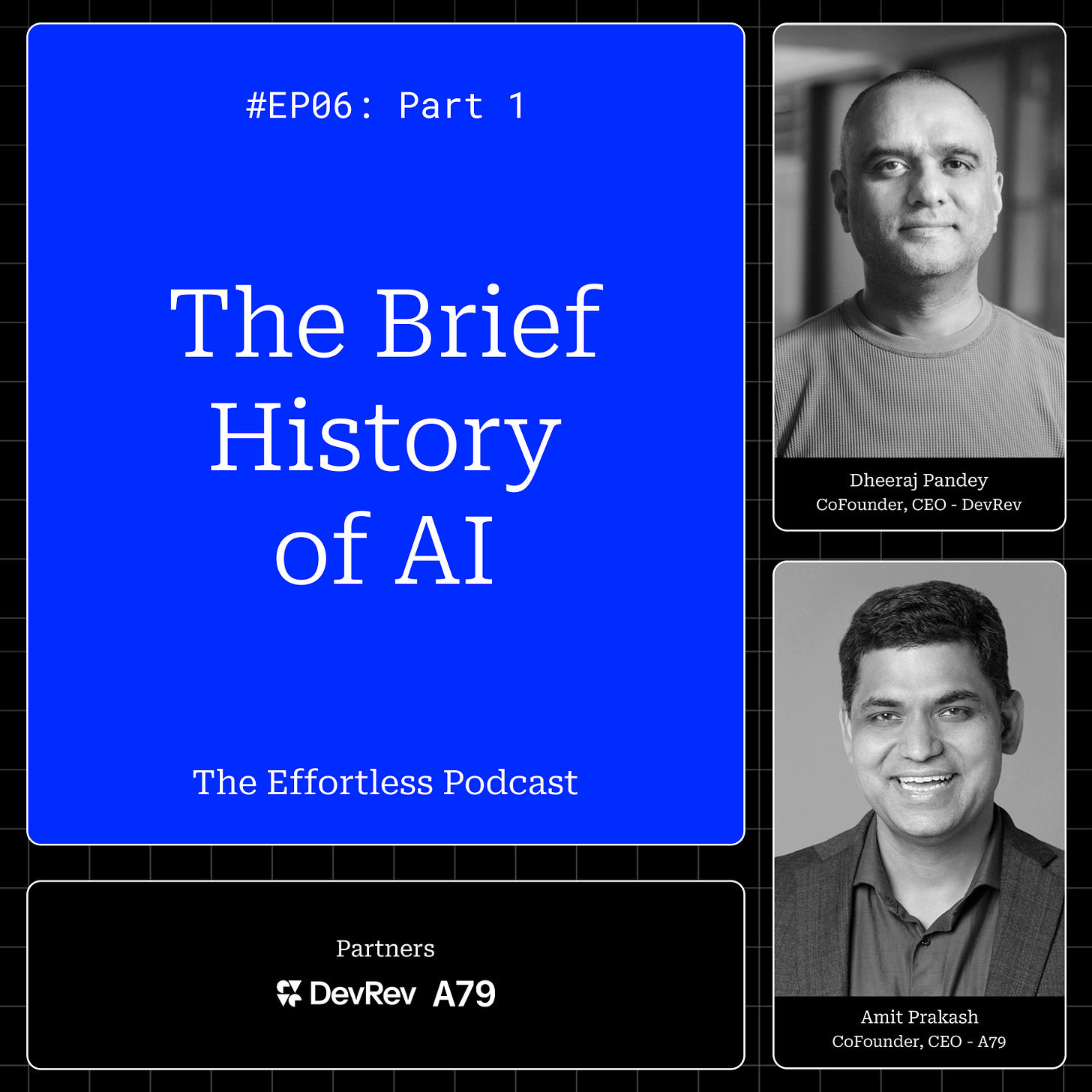

Hosts:

Dheeraj Pandey - Co-Founder & CEO of DevRev, former CEO of Nutanix

Amit Prakash - Co-Founder & CTO of ThoughtSpot, former engineer at Google and Microsoft

Summary

In this episode, Dheeraj and Amit take a deep dive into the origins of artificial intelligence and the decades-long journey it has taken. The discussion traces AI’s evolution from early neural networks and the discoveries in biology that inspired them, through advances in computing power, to modern developments such as GPUs and the rise of deep learning. It covers the concepts that form AI’s foundation and their parallels to other fields, like biology and operations research, giving listeners a clearer picture of AI’s trajectory.

Key Takeaways

The Evolutionary Path of AI: AI’s development has been a cyclical journey of progress and setbacks, with cycles of hype and innovation recurring roughly every 10 to 15 years.

Influences from Biology: Early AI concepts were inspired by human biology, especially the functioning of neurons in the brain.

Layers and Functions in Neural Networks: Neural networks use weighted inputs and nonlinear functions to emulate how neurons work, with a focus on learning and pattern recognition.

Computational Milestones: The contrast between complex and reduced instruction sets (CISC vs. RISC) and the introduction of GPUs shaped the hardware that significantly accelerated AI’s growth.

Advancements with CNNs and Limitations of RNNs: Convolutional neural networks (CNNs) advanced visual processing, while recurrent neural networks (RNNs) struggled with long-range context and memory, setting the stage for the 2017 “Attention Is All You Need” paper.

The Importance of ImageNet: ImageNet provided labeled data at scale, becoming a benchmark that fueled major advances in computer vision.

In-Depth Insights

The Cyclical Nature of AI's Evolution

AI's journey has been compared to a rollercoaster, with cycles of breakthroughs followed by setbacks as expectations often outpaced technology. The promise of AI in the 1950s and 1960s, for instance, was dampened by the limitations of hardware and understanding, a pattern that repeated itself until significant advances in computing power and algorithmic training became possible.

Biological Inspiration and the Concept of Neurons

Inspired by biological neurons, early AI researchers aimed to emulate the brain’s basic structure. The concept of neurons as “Lego blocks” that could be rearranged for various tasks inspired neural network models. Researchers observed that each neuron processes inputs based on weighted connections, which form the basis of the learning function in artificial neurons. This insight allowed early neural networks to simulate a “learning” process, foundational to pattern recognition.
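The weighted-input idea described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names, the choice of a sigmoid as the nonlinear function, and the input, weight, and bias values are all assumptions made for the example, not details from the episode.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through a nonlinearity."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


# Hypothetical values, chosen only for illustration.
output = neuron(inputs=[1.0, 0.0, 1.0], weights=[0.5, -0.3, 0.8], bias=-1.3)
print(output)
```

"Learning" in this picture means adjusting the weights and bias so that the output moves closer to the desired answer for each example.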

Evolution of Neural Networks and Convergence Challenges

The development of multi-layered neural networks introduced complexity, requiring methods like gradient descent to adjust the network’s parameters (weights) so that error is minimized. However, the challenge of convergence, that is, getting training to settle reliably on weights that produce low error, hindered early models and caused setbacks until optimization techniques like backpropagation emerged in the 1980s.
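As a rough illustration of gradient descent, the sketch below fits a single linear neuron (one weight and one bias) to a handful of points by repeatedly nudging the parameters against the gradient of the mean squared error. The data, learning rate, step count, and the name `train_linear` are assumptions for the example; backpropagation applies the same idea to multi-layer networks by using the chain rule to compute the gradient for every layer.

```python
def train_linear(points, lr=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(steps):
        # Partial derivatives of the mean squared error w.r.t. w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        # Step a small distance "downhill", against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(points)
print(w, b)
```

This error surface is convex, so the descent settles near the single best answer. The networks discussed in the episode have non-convex error surfaces, which is where convergence becomes hard.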

The Advent of GPUs and the Importance of Parallel Processing

The transition from CPUs to GPUs enabled AI to flourish because GPUs handle parallel tasks efficiently. In contrast to CPUs, which excel at executing a sequence of complex tasks, GPUs are well suited to the simpler, matrix-based operations in neural networks, where the same calculation is applied to many data elements at once, especially for visual and spatial data.
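To see why this kind of work parallelizes well, consider one layer of a network written as a matrix-vector product. The sketch below runs sequentially in plain Python and is not GPU code; the point it is meant to show is that each output value is an independent dot product. The function name and the numbers are assumptions for illustration.

```python
def layer_forward(weight_rows, inputs):
    """Compute one output per weight row as a dot product with the inputs.

    No output depends on any other output, so in principle all of them
    could be computed at the same time on parallel hardware.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weight_rows]


# Hypothetical 3x2 weight matrix and a 2-element input vector.
weights = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
outputs = layer_forward(weights, [2.0, 4.0])
print(outputs)
```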

The Impact of ImageNet on AI’s Progress

In the 2010s, ImageNet, a large-scale database of labeled images, became a pivotal dataset for AI. By providing a standardized benchmark, it let researchers compare algorithms directly and sparked advancements in image classification and computer vision. This milestone highlighted the critical role of high-quality, labeled data in training AI models.

Recurrent Neural Networks (RNNs) and the Memory Challenge

RNNs brought memory to neural networks, allowing them to process sequential data by feeding outputs back as inputs, which is crucial for natural language processing. However, RNNs faced problems with long-term context, where gradient values would either “explode” or “vanish” over multiple steps. This limitation led to the need for new architectures capable of better contextual understanding.
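A heavily simplified way to see the exploding and vanishing behavior: in a scalar, linear recurrence where each state is the previous state times a recurrent weight, the gradient that flows back through many steps is that weight multiplied by itself once per step. The sketch below assumes this toy setup and ignores activation functions and matrices entirely; the names and values are illustrative.

```python
def gradient_after(steps: int, recurrent_weight: float) -> float:
    """Gradient of the final state w.r.t. the first state in h_t = w * h_(t-1)."""
    grad = 1.0
    for _ in range(steps):
        grad *= recurrent_weight  # one chain-rule factor per time step
    return grad


vanishing = gradient_after(50, 0.5)  # factor below 1: shrinks toward zero
exploding = gradient_after(50, 1.5)  # factor above 1: grows without bound
print(vanishing, exploding)
```

With a factor even slightly below or above 1, fifty steps is enough to make the signal from early inputs either negligible or unmanageably large.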

2017 and the Breakthrough with Transformers

The limitations of RNNs set the stage for the 2017 “Attention Is All You Need” paper, which introduced the transformer architecture. This model eliminated the need for recurrence by giving every position in a sequence direct access to distant context, addressing the problem of long-term dependencies in sequence processing and leading to significant advances in natural language processing.
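The core operation behind this direct access is scaled dot-product attention. The sketch below is a minimal, single-query version in plain Python, without the learned projections or multiple heads a real transformer uses; the function names and the toy vectors are assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query over all positions."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical sequence of three positions; positions 0 and 2 share a key.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
output, weights = attention([4.0, 0.0], keys, values)
print(weights)
```

Positions 0 and 2 receive the same weight because their keys match the query equally well. How far apart they sit in the sequence plays no role in this calculation, which is the contrast with an RNN that has to carry information forward step by step.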

Host Biographies

Amit Prakash

Co-founder and CTO at ThoughtSpot, previously at Google and Microsoft. Amit has an extensive background in analytics and machine learning, holding a Ph.D. from UT Austin and a B.Tech from IIT Kanpur.

Dheeraj Pandey

Co-Founder and CEO of DevRev, and former CEO of Nutanix. Dheeraj has led multiple tech ventures and is passionate about AI, design, and the future of product-led growth.

Episode Breakdown

{00:00:00} Introduction to AI’s Trajectory: Cycles of hype, excitement, and setbacks every 10 to 15 years.

{00:00:26} Recap of Previous Episodes: Listener feedback on deep dives into AI and product management.

{00:01:00} AI’s Parallels with Biology and Technology: 50-year development cycles from DNA’s double helix to mRNA and from ENIAC to mobile computing.

{00:02:59} The Cyclical Nature of AI Progress: Compared to early struggles in computing, such as the "paperless office" concept.

{00:04:00} The First Era of AI in the 1950s and 1960s: Mathematical foundations, interpolation, and convergence challenges.

{00:05:00} Biology’s Influence on AI: From understanding the brain’s neuron structures to mathematical modeling of neural networks.

{00:06:00} Neural Basics: Neurons, synapses, and the concept of weighted inputs and nonlinear functions.

{00:07:00} Neural Connectivity and Brain Adaptability: How neurons can “retrain” for new functions.

{00:08:00} Sensory Substitution Experiments: Seeing through touch, highlighting brain plasticity.

{00:09:00} The Mathematical Version of the Neuron: How input vectors and weight matrices lead to weighted sums and nonlinear activation functions.

{00:10:15} Key Definitions: Vectors, matrices, and their differences, with real-life analogies.

{00:11:00} Convergence in Neural Networks: Challenges with linear separability and the XOR problem.

{00:15:00} The 1980s and 1990s: Introducing gradient descent, simulated annealing, and backpropagation to address convergence issues.

{00:17:41} XOR and Non-Linear Separability: Why XOR posed a key mathematical obstacle for early neural networks.

{00:18:59} Backpropagation and Error Functions: Minimizing error through derivative-based adjustments to weights.

{00:20:00} Introduction to Gradient Descent: How small adjustments are calculated to find the local or global minimum.

{00:24:13} Concept of Gradient Descent: A visual analogy of water seeking the lowest point in a valley.

{00:25:00} Simulated Annealing: Adding controlled randomness to escape local minima and discover more optimal solutions.

{00:28:00} Real-Life Applications of Simulated Annealing and Entropy: Examples from finance and physics.

{00:29:47} Exploring Backpropagation: Adjusting weights from outer to inner layers in multi-layer networks.

{00:31:00} Challenges of Large Networks: Computing billions of partial derivatives for efficient training.

{00:32:33} Operations Research Insights in AI Optimization: Parallels to the classic traveling salesman and max-flow min-cost problems.

{00:34:59} Convex vs. Non-Convex Functions in Optimization: Why convexity simplifies finding the global minimum.

{00:37:00} 2000s and Beyond: Enter GPUs, the rise of parallel processing, and their impact on deep learning.

{00:40:00} Differences Between CPU and GPU Architectures: CISC (Intel) and RISC (ARM/NVIDIA) approaches.

{00:43:00} Data Structure Shifts: From single-cell random access memory to structured matrix processing for efficient data handling.

{00:47:42} Emergence of SIMD (Single Instruction Multiple Data): Parallel data processing, a cornerstone for GPUs.

{00:49:55} 2010s and the ImageNet Revolution: Labeled datasets enable huge strides in image classification and computer vision.

{00:50:57} Convolutional Neural Networks (CNNs) for Visual Processing: How convolution operations mimic real-world filtering.

{00:52:09} Understanding CNNs Through Convolution: Smoothing and edge detection for image analysis.

{00:54:07} Rise of RNNs: How recurrence adds memory for handling sequential data like language and time series.

{00:57:00} RNNs and the Vanishing/Exploding Gradient Problem: How it limits RNNs’ effectiveness with long sequences.

{01:00:00} Importance of Context and Memory: Why RNNs faced limitations with deep, long-term dependencies.

{01:03:00} Limitations of RNNs in Modeling Far-Reaching Context: The inability to effectively reference “distant” information in text.

{01:07:00} Dynamic Programming Comparisons: Setting the stage for future breakthroughs in context handling with the 2017 transformer model.

References and Resources

Foundational Concepts and Mathematics

Weighted Sum of Inputs: Understanding weighted sums in neural networks.

Interpolation and Convergence: Exploring the basics of convergence in AI.

XOR Problem in Neural Networks: Why XOR challenged early neural networks.

Optimization Techniques

Backpropagation: The key algorithm for training neural networks.

Gradient Descent: A simple explanation of gradient descent.

Simulated Annealing: Using randomness in optimization.

Parallel Computing and Hardware Evolution

GPUs and SIMD Architecture: The rise of GPUs for AI workloads.

Broader Context and Thought Leadership

Convex vs. Non-Convex Functions: Why convexity matters in optimization.

Nassim Nicholas Taleb's "Fooled by Randomness": How randomness shapes outcomes.

AI’s progress has been anything but linear, marked by a series of breakthroughs often followed by challenging periods. From early neural networks inspired by biology to the computational leaps afforded by GPUs, each phase has set the stage for the next, culminating in pivotal moments like ImageNet and, later, the Transformer model. This episode underscores the importance of interdisciplinary insights, hardware innovations, and the role of data in AI’s ongoing journey. With these foundations in place, AI's potential seems closer to being realized than ever before.