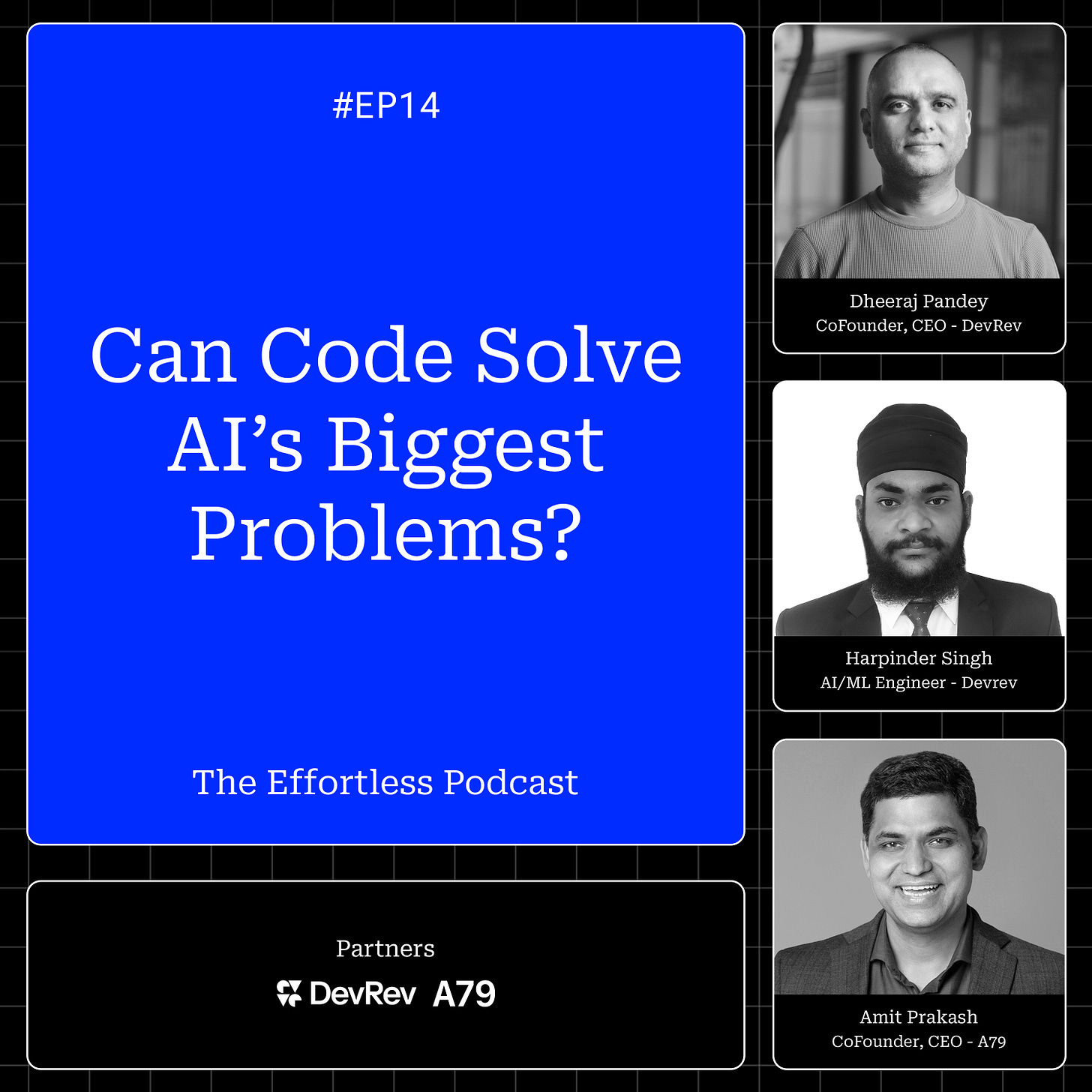

Hosts

Dheeraj Pandey – Co-founder and CEO of DevRev, former CEO of Nutanix

Amit Prakash – Co-founder and CTO of ThoughtSpot, former engineer at Google and Microsoft

Guest

Harpinder Jot Singh – AI/ML Engineer at DevRev, focusing on enhancing large language models (LLMs) and integrating AI into enterprise workflows. He joined DevRev in 2021 after graduating in engineering.

Summary

In this episode of The Effortless Podcast, Harpinder Singh (Happy) discusses his journey at DevRev and the company’s focus on AI-driven innovation. He reflects on his decision to stay in India despite opportunities abroad, citing the growing tech ecosystem and the increasing potential for local innovation. He also explains the shift from federated to integrated systems in AI, and how integrated systems offer better reliability and performance by centralizing data.

The discussion also explores function calling, which lets language models interact with external systems in real time to perform complex tasks. Happy delves into the CodeAct paper, which proposes replacing traditional tool calls with Python code execution so that LLMs can carry out more complex operations in fewer steps. The conversation wraps up with DevRev’s AI-powered workflow automation, which aims to make enterprise solutions more accessible by letting companies automate tasks without deep technical knowledge.

Key Takeaways

AI's Evolution: Function calling has changed how LLMs interact with the world by enabling real-time data retrieval and task execution through API calls and external systems.

Federated vs. Integrated Systems: Federated systems leave data in legacy systems, offering flexibility but facing challenges in speed and reliability. Integrated systems bring data together, offering lower latency and more consistent performance but requiring more infrastructure.

CodeAct's Potential: The CodeAct paper proposes using code execution (e.g., Python) instead of traditional tool calls to create more flexible, efficient LLM agents capable of handling complex tasks with fewer interactions.

India's Tech Ecosystem: While historically seen as a hub for outsourcing, India is rapidly becoming a destination for innovation and talent, with more global companies expanding there and opportunities for local developers growing.

AI for Enterprises: DevRev’s focus on AI-driven workflow automation seeks to streamline enterprise operations, enabling companies to automate complex processes without deep technical expertise and making AI more accessible.

LLM Training and Fine-Tuning: The transition from pre-training (predicting next tokens) to post-training (following instructions and human preferences) is key to improving LLM utility, with reinforcement learning playing a vital role in aligning models with human feedback.

Python Code and Turing Completeness: Python’s flexibility makes it an ideal language for LLM agents to perform complex operations, including loops and conditional logic, which traditional single-step function calling does not support.

Future of AI Agents: The next stage for AI agents involves enhancing their ability to generate and execute code, leading to more powerful and autonomous systems that can handle increasingly complex, real-world tasks.

In-Depth Insights

1. Happy’s Journey into AI

Harpinder "Happy" Singh’s journey into AI started after he graduated and joined DevRev in 2021, where he quickly became a key contributor to the company’s AI initiatives. His focus is on enhancing large language models (LLMs) and integrating AI into enterprise workflows. His work centers on improving how AI models interact with real-world systems, streamlining tasks, and making AI more practical for day-to-day business operations.

2. The Power of Python in AI

A major theme of the episode is the role of Python in AI, especially in the context of the CodeAct paper. Happy explains how Python’s flexibility makes it an ideal language for LLMs to express complex tasks using loops, conditional logic, and powerful libraries like pandas and NumPy. This lets models go beyond simple tool calls and handle more intricate, multi-step tasks. Because Python is Turing complete, a model that can write and run it is not limited to a fixed menu of tools, which gives AI systems greater autonomy in executing real-world tasks.
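To make the point about loops and conditionals concrete, here is a minimal sketch of the kind of script an agent could emit in one go. The `tickets` data, the `triage` function, and the `max_age_days` threshold are hypothetical and chosen only for illustration; they do not come from the episode or from DevRev's product.

```python
# Hypothetical records standing in for data an agent might fetch
# from an enterprise system.
tickets = [
    {"id": "T-1", "priority": "high", "age_days": 5},
    {"id": "T-2", "priority": "low", "age_days": 12},
    {"id": "T-3", "priority": "high", "age_days": 1},
    {"id": "T-4", "priority": "medium", "age_days": 9},
]


def triage(tickets, max_age_days=3):
    """Loop over tickets and branch on their fields in a single pass."""
    escalate, remind = [], []
    for ticket in tickets:                        # looping
        if ticket["age_days"] <= max_age_days:    # conditional logic
            continue                              # recent tickets need no action
        if ticket["priority"] == "high":
            escalate.append(ticket["id"])
        else:
            remind.append(ticket["id"])
    return {"escalate": escalate, "remind": remind}


result = triage(tickets)
print(result)
```

Expressing the same logic through individual tool calls would need one call per ticket plus a model turn for each decision, whereas here the branching happens inside the script.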

3. The Power of Function Calling in AI

One of the most transformative developments in AI is function calling, which allows LLMs to carry out tasks in real time by interacting with external systems. It moves models beyond generating text and into work that requires live data retrieval, API calls, and complex decision-making. Happy highlights that this capability dramatically enhances the flexibility of AI systems, enabling them to solve more complex problems autonomously.
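As a rough illustration of the mechanics, function calling can be pictured as the model emitting a structured request that the host application parses and routes to real code. The sketch below assumes a simple JSON shape with `name` and `arguments` keys; the `get_weather` tool, its canned data, and the `dispatch` helper are hypothetical and do not reflect any particular vendor's API.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool; a real one would call a weather API."""
    canned = {"Bangalore": 27, "Delhi": 33}
    return {"city": city, "temp_c": canned.get(city)}


# Registry of functions the model is allowed to request.
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps(TOOLS[name](**args))


# Stand-in for text a model might produce when it decides to call a tool.
model_output = '{"name": "get_weather", "arguments": {"city": "Bangalore"}}'
print(dispatch(model_output))
```

The result string would normally be fed back to the model so it can compose the final answer for the user.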

4. User, Agent, and Environment Model

Happy breaks down the user-agent-environment model, which is key to understanding how AI systems, especially LLMs, interact with the world. In this model:

User: The individual or system that interacts with the AI to request a task or query.

Agent: The AI model itself, responsible for processing the user’s input and generating the appropriate responses.

Environment: The external systems or data sources the agent interacts with to complete the task. This could include databases, APIs, or other external services that provide necessary data or functionality.

The environment plays a crucial role in how LLMs interact with the world. Happy highlights how LLMs, when given the ability to execute Python code, can interact with environments more dynamically. This shift from simple API calls to executing Python code enables AI to perform more complex operations, such as manipulating data, making decisions, and processing results in real time.
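The three roles above can be sketched as a small loop. This is a toy under stated assumptions: `ScriptedAgent` is a hard-coded stand-in for an LLM, the `Environment` is an in-memory dictionary, and the action format (`read`, `write`, `reply`) is invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """External state the agent can observe and change (a toy in-memory store)."""
    records: dict = field(default_factory=lambda: {"open_tickets": 3})

    def step(self, action: dict) -> dict:
        if action["type"] == "read":
            return {"value": self.records.get(action["key"])}
        if action["type"] == "write":
            self.records[action["key"]] = action["value"]
            return {"ok": True}
        return {"error": "unsupported action"}


class ScriptedAgent:
    """Stand-in for an LLM: picks the next action from the request and last observation."""

    def act(self, request: str, observation):
        if observation is None:
            return {"type": "read", "key": "open_tickets"}
        if "value" in observation:
            return {"type": "reply", "text": f"{request} -> {observation['value']}"}
        return {"type": "reply", "text": "could not complete the request"}


def run(request: str, agent, env, max_turns: int = 5) -> str:
    """Drive the user -> agent -> environment loop until the agent replies."""
    observation = None
    for _ in range(max_turns):
        action = agent.act(request, observation)
        if action["type"] == "reply":     # the agent answers the user
            return action["text"]
        observation = env.step(action)    # the agent acts on the environment
    return "turn limit reached"


print(run("How many tickets are open?", ScriptedAgent(), Environment()))
```

Swapping the scripted policy for a real model changes only `act`; the loop between agent and environment stays the same.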

5. CodeAct and the Shift to Python Execution

The CodeAct paper introduces an exciting shift: replacing traditional tool calls (e.g., getting weather data or booking flights) with Python code execution. Happy explains how this move enables LLMs to perform multi-step operations more effectively. Rather than having to call multiple tools sequentially (which can be time-consuming), the agent can execute a single Python script to handle the task from start to finish. This reduces the number of interactions between the user, agent, and environment, allowing for faster and more efficient problem-solving.
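The difference in round trips can be sketched as follows. This is not the paper's implementation: `get_temp` is a hypothetical tool with canned data, the `code_action` string stands in for code a model might generate, and the bare `exec` call is used only for illustration (it is not a sandbox, and a real system would need proper isolation).

```python
call_log = []


def get_temp(city: str) -> int:
    """Hypothetical tool with canned data; records each invocation."""
    call_log.append(city)
    return {"Bangalore": 27, "Delhi": 33, "Mumbai": 30}[city]


cities = ["Bangalore", "Delhi", "Mumbai"]

# Style 1: traditional tool calling. Each call is a separate agent turn,
# and every result goes back to the model before the next call.
tool_call_turns = 0
temps = {}
for city in cities:
    temps[city] = get_temp(city)
    tool_call_turns += 1
hottest_by_tools = max(temps, key=temps.get)

# Style 2: a single code action. The model emits one script that calls
# the tool in a loop and post-processes the results itself.
code_action = """
temps = {c: get_temp(c) for c in cities}
hottest = max(temps, key=temps.get)
"""
namespace = {"get_temp": get_temp, "cities": cities}
exec(code_action, namespace)  # illustration only; exec is not a sandbox
code_action_turns = 1

print(hottest_by_tools, tool_call_turns)
print(namespace["hottest"], code_action_turns)
```

Both styles invoke the tool the same number of times; what shrinks is the number of model turns needed to reach the answer.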

6. Federated vs. Integrated Systems in AI

Happy discusses the trade-offs between federated and integrated systems. Federated systems, which leave data in its original sources, provide more flexibility but introduce latency and reliability issues. In contrast, integrated systems centralize data for better performance and consistency. DevRev’s use of integrated systems enables faster, more reliable AI-driven workflows, improving real-time decision-making and reducing the complexity of managing multiple systems.
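The trade-off can be illustrated with a toy model. The `Source` class, the document strings, and the outage scenario below are all hypothetical; the sketch only shows that a federated query depends on every source at query time, while an integrated index depends on an earlier sync.

```python
class Source:
    """Hypothetical legacy system holding some documents."""

    def __init__(self, name, docs, available=True):
        self.name, self.docs, self.available = name, docs, available
        self.queries = 0

    def search(self, term):
        self.queries += 1
        if not self.available:
            raise ConnectionError(f"{self.name} is unreachable")
        return [d for d in self.docs if term in d]


def federated_search(sources, term):
    """Fan out to every source at query time; an outage yields partial results."""
    hits, failed = [], []
    for source in sources:
        try:
            hits.extend(source.search(term))
        except ConnectionError:
            failed.append(source.name)
    return hits, failed


def build_index(sources):
    """Integrated approach: copy data into one store ahead of time (the sync step)."""
    index = []
    for source in sources:
        index.extend(source.docs)
    return index


def integrated_search(index, term):
    """Query the central store only; no source is contacted at query time."""
    return [d for d in index if term in d]


crm = Source("crm", ["refund request", "login issue"])
tracker = Source("tracker", ["refund bug", "ui glitch"])

index = build_index([crm, tracker])  # sync while both sources are reachable
tracker.available = False            # later, one source goes down

fed_hits, failed = federated_search([crm, tracker], "refund")
int_hits = integrated_search(index, "refund")
print(fed_hits, failed)
print(int_hits)
```

The integrated path keeps answering during the outage, at the cost of running and storing the sync, and its results are only as fresh as the last sync.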

Host Biographies

Dheeraj Pandey

Co-founder and CEO of DevRev, and former CEO of Nutanix. Dheeraj has led multiple tech ventures and is passionate about AI, design, and the future of product-led growth.

LinkedIn | X (Twitter)

Amit Prakash

Co-founder and CTO of ThoughtSpot. Formerly an engineer at Google and Microsoft. Amit has deep expertise in distributed systems, data platforms, and machine learning.

LinkedIn | X (Twitter)

Guest Biography

Harpinder Jot Singh

AI/ML Engineer at DevRev, focusing on cutting-edge advancements in large language models (LLMs) and AI-driven workflows. Harpinder joined DevRev in 2021, where he has contributed significantly to the company's AI initiatives, including the integration of function calling and improving LLM capabilities.

LinkedIn

Episode Breakdown

{00:00:00} – Introduction to Happy – Dheeraj and Amit introduce Harpinder "Happy" Singh, discussing his journey at DevRev and the role he plays in AI development.

{00:03:30} – Happy’s Early Background – Growing up in Shahjahanpur, attending BITS Pilani, and discovering his passion for engineering and computer science.

{00:07:00} – The Transition to AI – Happy’s move from workflow engineering to AI, and what excites him about the LLM space.

{00:13:30} – Bangalore Life and Growing with DevRev – On moving away from home, taking responsibility, and maturing quickly while growing with the company.

{00:18:30} – Federated vs. Integrated Systems – What these architectures mean for AI reliability, search latency, and permissioning.

{00:25:00} – Python and the Power of Code – Why Python matters in AI: loops, conditions, execution, and why code > tool calls.

{00:32:00} – User, Agent, and Environment – How AI agents work by sensing the environment, acting, and evolving intelligently.

{00:39:30} – The CodeAct Paper – Why generating executable Python unlocks richer behavior than single-step function calling.

{00:47:00} – AI and Business Automation – How DevRev is using AI for internal workflows and building agent-first infrastructure.

{01:02:30} – Looking Ahead at DevRev – Enabling no-code orchestration, Turing-complete agents, and integrating LLMs with enterprise systems.

{01:10:00} – Final Reflections – Happy on AI’s potential to reshape industries through real-time, intelligent automation.

References and Resources

CodeAct Paper

The paper "Executable Code Actions Elicit Better LLM Agents" explores the idea of replacing traditional tool calls with Python code execution for more complex and efficient LLM operations. This approach allows language models to handle tasks more flexibly and autonomously.

Learn more: CodeAct Paper

InstructGPT Paper

This paper from OpenAI introduced the concept of fine-tuning large language models for instruction-following, improving their ability to generate responses aligned with human preferences using techniques like reinforcement learning from human feedback (RLHF).

Learn more: InstructGPT Paper

Reinforcement Learning from Human Feedback (RLHF)

RLHF is a key technique used in post-training for aligning models with human preferences by providing a feedback loop that helps models improve their responses based on human-labeled data.

Learn more: RLHF Overview

LLMs and Function Calling

This article explores recent advancements in function calling, which enable large language models (LLMs) to invoke functions in real time, helping AI systems perform complex, real-world tasks.

Learn more: Function Calling in LLMs

DevRev AI Solutions

DevRev leverages AI-driven workflows to automate and optimize enterprise operations, helping companies scale more efficiently by integrating AI into their daily processes.

Learn more: DevRev Official Website

Meta's Tool Formalization Paper

This paper from Meta discusses how LLMs can predict and use tools like web search APIs to interact with external data in a more structured way, laying the groundwork for current function calling systems.

Learn more: Meta’s Tool Formalization Paper

OpenAI's API

OpenAI’s API is a powerful tool for developers to integrate AI into their applications. It allows for real-time querying and function calling with various LLMs, enabling complex integrations and interactions with external systems.

Learn more: OpenAI API

Effortless Podcast on DeepSeek

This podcast episode delves into the DeepSeek model, which represents a new approach in AI reasoning by utilizing reinforcement learning with verifiable rewards and establishing better ways to handle large-scale tasks.

Learn more: Effortless Podcast on DeepSeek

Context-Free Grammar Generation

A technique for constraining LLM output to a formal grammar, often applied at decoding time so that models produce valid JSON or other structured formats for easier integration with external systems.

Learn more: Context-Free Grammar Overview

Gorilla Paper by UC Berkeley

The Gorilla paper explores the concept of training LLMs to interact with a wide range of tools and APIs by enabling function calling, creating an environment for LLMs to manage multiple tool interactions.

Learn more: Gorilla Paper by Berkeley

Conclusion

AI and machine learning are evolving at an astonishing rate, and as Harpinder Singh (Happy) discusses, the future of AI is not just about faster processing but about enabling systems to perform complex tasks with greater autonomy and efficiency. From advances in function calling to the CodeAct paper, AI is becoming more adaptable: models can generate and execute code in real time, which makes them more flexible in handling real-world challenges. The debate between federated and integrated systems continues, with each approach offering advantages for different use cases.

As AI integrates more deeply into enterprise solutions, companies like DevRev are leading the way by making these technologies more accessible, streamlining workflows, and automating processes without requiring deep technical expertise. With innovations like CodeAct, the next phase of AI promises a future where models are not only more efficient but can tackle a wider range of tasks with fewer interactions. This episode provides a glimpse into an exciting future where AI isn’t just a tool but a partner in innovation. As we continue to explore and develop these technologies, we’re on the brink of a transformative shift that will redefine how businesses and industries operate.

Stay tuned for more deep dives into the effortless world of innovation.
